Whether you love it or hate it, Artificial Intelligence (AI), and content AI specifically, is here to stay and is dominating chatter on the airwaves and everywhere from classroom to boardroom.

ChatGPT, the most reported-on AI platform, was crowned the fastest-growing consumer app in history by Swiss bank UBS in a 3 February analyst note based on SimilarWeb data.

We’re talking about an estimated 100 million active users in January, just two months after the launch. For reference, TikTok (the previous title holder) took nine months to hit that number; Instagram, two and a half years.

(Click here or scroll to the end to see our email newsletter vs the ChatGPT version...)

At BlueChip’s inaugural Summer Soiree, we grilled Danielle Stitt on content AI and ChatGPT and their potential impact on communication, marketing and financial services.

Here are our top 3 takeaways:

What is content AI? Why should we care about it and how might it be used within the financial services industry?

Content AI is the use of artificial intelligence techniques such as natural language processing, machine learning, and deep learning to create human-like content such as written copy, images, audio, and video.

But it goes much deeper than that.

Content AI now has the capacity to generate a wide variety of content, from news articles and social media posts to product descriptions and marketing materials. Though automated, the content created is almost indistinguishable from human-generated content, and the gap between the two continues to narrow as the machines learn and evolve (cue title sequence for Terminator 2).

Jokes aside, the potential of content AI in the workplace is, as it stands, largely untapped. Automating creative tasks significantly reduces the reliance on otherwise expensive human hours, allowing companies to create and distribute more content in less time. Even if the automated content is not completely up to scratch, it’s sophisticated enough to get 70% of the way there before a human takes over.

It might surprise you to learn that several high-profile publications already use AI to generate content, too. Forbes uses a tool called Quill to write earnings reports, and The Associated Press uses Wordsmith to write thousands of articles about finance and sport. These systems can’t produce articles autonomously just yet – to get something readable out of them, someone has to feed in statistics. Each system, which has learnt basic rules about grammar and structure, then determines which data carries the most weight and turns it into an article.

For financial services organisations, I can see content AI platforms like ChatGPT, Jasper, Rytr, Copy.ai, Writesonic and others helping to inform credit decisions; supporting risk management using structured and unstructured data sets; aiding fraud prevention, such as flagging a credit card charge that falls outside normal spending patterns; driving high-frequency trading that draws on social media content, for example; and improving customer service and personalisation.

What impact might we see from content AI on financial services companies’ and leaders’ reputations?

In short, significant.

On the positive side, content AI has the potential to help everyone, from insurers and superannuation funds to fund managers and retail financial services organisations, streamline communication and improve the customer experience. Just one example: tools like chatbots, automated replies, and personalised email content curation are massive time savers and should allow the customer service team more time to handle less common or trickier questions.

On the flip side, I think there are significant risks operationally and reputationally. A simple example is that right now AI-generated content can come across as insincere or robotic. A case in point is the American online media giant CNET, which was outed for using content AI to write articles on personal finance and then forced to issue public corrections on more than half of the stories because they had been published with fairly serious plagiarism and errors.

Content AI also raises concerns about data privacy, security, and ethical dilemmas related to the use of automated decision-making algorithms. Financial services companies and leaders need to be very transparent about how they collect, store, and use data, and ensure any AI-powered communication strategies are aligned with their values, principles, and regulatory requirements at all levels. Failure to do so can seriously damage reputation and erode trust amongst stakeholders.

What might be some potential risks for financial services businesses using content AI? Might the use of content AI lead to an increase in cyber risk?

One potential risk is the security of the AI system itself. If the AI system is not properly secured, it could be vulnerable to cyber-attacks, such as data breaches, malware infections, or denial-of-service attacks. Hackers could exploit vulnerabilities in the AI system to steal sensitive data or disrupt business operations.

Another risk is the quality of the content generated by an artificial intelligence system like ChatGPT. If the AI system is not properly trained or supervised, it could generate inaccurate, misleading, or inappropriate content, which could harm the reputation of the financial services business or even lead to legal or regulatory issues at scale.

To reduce these risks, financial services businesses should ensure that their AI systems are properly secured, trained, and monitored. This will include regular vulnerability testing of any processes which involve artificial intelligence and putting in place safeguards such as access controls, data encryption, and intrusion detection systems. If you haven’t already, you’re going to need to go further than just having procedures to respond to an (inevitable) cyber breach: plan a scenario around AI and, ideally, practise the management team’s response to it. There’s not much point doing, say, penetration testing if you haven’t also operationally tested the management response to the kind of novel ethical dilemmas that artificial intelligence, ChatGPT and cybercrime will present.

(Wo)man vs the machine

In a parallel universe, ChatGPT also wrote our latest edition of 'Take a beat Tuesday' - our monthly newsletter. See how it did below and how it weighs up against our efforts.

Dear {First Name}

Whether you love it or hate it, Artificial Intelligence (AI), and content AI specifically, is here to stay and is dominating chatter on the airwaves and everywhere from classroom to boardroom.

Read on for a free future-proof umbrella…

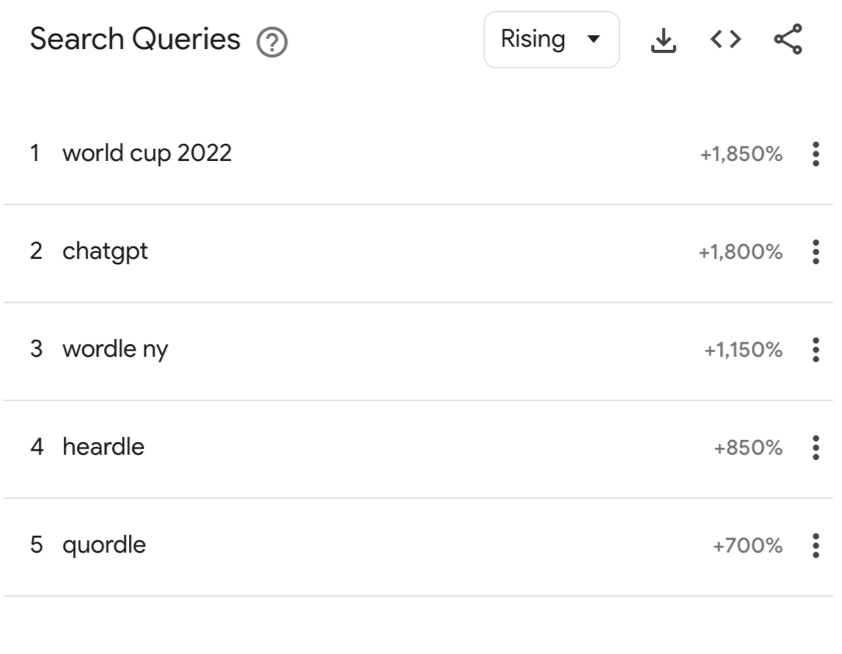

While I don’t love what search trends tell us about the national character (that would be NRL scores and Cocaine Bear last month), the surge of interest in content AI is mirrored in search trends. This screenshot (taken at AEDST 3.27pm yesterday) shows Google Trends (searches) in Australia over the last year.

ChatGPT, the most reported-on AI platform, was crowned the fastest-growing consumer app in history by Swiss bank UBS in a 3 February analyst note based on SimilarWeb data.

We’re talking about an estimated 100 million active users in January, just two months after the launch. For reference, TikTok (the previous title holder) took nine months to hit that number; Instagram, two and a half years.

When asked to summarise its own potential impact on the financial services sector in one sentence, ChatGPT told us “increasing customer engagement, streamlining and accelerating content production and processes, and influencing decision-making through personalised and relevant content, automation of mundane tasks, and data analysis”.

Right then. So, I’m thinking “Hasta la vista” for me professionally. And you maybe?

We asked this and other burning questions of a resident expert who has one foot in the tech sector.

Danielle Stitt, if you don’t know her, is BlueChip’s former CEO, and an engineer who has both a Masters in banking and finance and 30+ years in marketing. She’s currently Digital Strategist At Large, and my go-to on disruptive communication technologies.

At BlueChip’s inaugural Summer Soiree, we grilled Dan on content AI and its potential impact on communication, marketing and financial services.

Here are my top 3 takeaways:

1. Expect financial services businesses to scale content and 1:1 communication like never before

If you need an analogy, think about how social media and email opened up, sped up and scaled direct channels to audiences – away from media, snail mail and outbound calling. This will be faster.

2. There is good and bad in content AI, with particular ethical and operational issues to resolve

3. Get learning

For now humans have the first and final say but don’t take that for granted.

You’ll find the longer version, a one-page summary or 3-minute read here.

In a parallel universe, ChatGPT also wrote this edition of TABT. You’ll find that here in a separate blog post marked [WARNING: CONTENT AI GENERATED CONTENT].

Please tell me you saw this coming!

It says a lot that the AI version lacks much of Dan Stitt’s analysis and our “so what?”.

The ChatGPT version also lacks my spicy top-and-tail additions.

Yes, ChatGPT can explain quantum physics or write a poem on command, but a full AI takeover isn't exactly imminent.

And it doesn’t reward you with a free wind- and future-proof BlueChip umbrella (claim that here) for reading to the end. Whatever content AI brings, we’ve got you covered (yes, a Dad joke… clearly not the machine).

Best,

Carden Calder

[WARNING: CONTENT AI GENERATED CONTENT].

Carden's ChatGPT Command: "Write an email to CEOs in financial services explaining the potential use cases, applications, ethical dilemmas and risks of using Content AI

Subject: Exploring the potential of Content AI in financial services"

Output:

Dear CEOs of Financial Services,

I am writing to you to discuss the potential use cases, applications, ethical dilemmas, and risks of using Content AI in financial services.

Content AI refers to artificial intelligence (AI) technologies that are used to generate and optimize content for various channels such as websites, social media, and email marketing. Content AI can help financial services companies to enhance their customer experience, improve their marketing campaigns, and reduce costs.

The potential use cases of Content AI in financial services are many. For example, Content AI can help financial services companies to personalize their communication with customers, provide timely and relevant information to them, and create more effective marketing campaigns. It can also help in automating customer service and support functions, such as chatbots, which can respond to customer inquiries in real-time.

However, the use of Content AI in financial services also raises several ethical dilemmas that need to be addressed. One major concern is the potential for bias in the data sets used to train the AI models. This can lead to discriminatory outcomes, such as denying access to financial services to certain groups of people based on their demographics or other characteristics. It is therefore essential to ensure that the AI models are trained on unbiased data sets, and that the algorithms are transparent and explainable.

Another risk associated with Content AI is the potential for data breaches and cyber-attacks. Financial services companies collect and store a vast amount of sensitive customer information, which can be targeted by hackers. It is therefore crucial to have robust cybersecurity protocols in place to safeguard this data.

In conclusion, the potential use cases and benefits of Content AI in financial services are significant. However, it is essential to address the ethical dilemmas and risks associated with its use. As CEOs of financial services companies, it is imperative to ensure that the AI models used are transparent, explainable, and unbiased, and that adequate measures are taken to safeguard the data of our customers.

Thank you for your attention, and I look forward to hearing your thoughts on this topic.

Sincerely,

[Your Name]